Textbook: Sutton and Barto, Reinforcement Learning: An Introduction

Chinese-language course by Prof. Bolei Zhou (周博磊)

coding

Framework: PyTorch

Difference from supervised learning: supervised learning (1) assumes the data are uncorrelated (i.i.d.) and (2) has labels.

Reinforcement learning: the data are not necessarily i.i.d., and there is no immediate feedback (delayed reward).

Exploration (trying new actions) & exploitation (taking the currently best-known action)

Features:

- Trial-and-error exploration

- Delayed reward

- Time matters (sequential, non-i.i.d. data)

- The agent’s actions affect the subsequent data it receives

Compared with supervised learning, whose labels are typically provided by humans, reinforcement learning can sometimes surpass human performance.

possible rollout sequence

agent&environment

Rewards: a scalar feedback signal
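A minimal sketch of the agent-environment loop that produces a rollout sequence, assuming a hypothetical toy corridor environment (`ToyEnv`, whose `reset`/`step` are only loosely modeled on the gym interface used later in these notes and return `(obs, reward, done)`). At each step the agent picks an action, the environment returns the next observation and a scalar reward, and the sequence of (observation, action, reward) triples is the rollout.

```python
import random


class ToyEnv:
    """Hypothetical 1-D corridor: positions 0..length-1, reward +1 on reaching the end."""

    def __init__(self, length=5):
        self.length = length
        self.pos = 0

    def reset(self):
        self.pos = 0
        return self.pos  # initial observation

    def step(self, action):
        # action is -1 (left) or +1 (right); the walls clip the position
        self.pos = min(max(self.pos + action, 0), self.length - 1)
        done = self.pos == self.length - 1
        reward = 1.0 if done else 0.0  # scalar feedback
        return self.pos, reward, done


def rollout(env, policy, max_steps=100):
    """Run one episode and return its (observation, action, reward) sequence."""
    obs = env.reset()
    trajectory = []
    for _ in range(max_steps):
        action = policy(obs)
        next_obs, reward, done = env.step(action)
        trajectory.append((obs, action, reward))
        obs = next_obs
        if done:
            break
    return trajectory


def always_right(obs):
    return +1


def random_policy(obs):
    return random.choice([-1, +1])


print(rollout(ToyEnv(), always_right))
# [(0, 1, 0.0), (1, 1, 0.0), (2, 1, 0.0), (3, 1, 1.0)]
print(len(rollout(ToyEnv(), random_policy)))  # varies from run to run
```

With the always-right policy the only nonzero reward arrives at the last step, a small instance of the delayed reward listed under the features.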

sequential decision making:

Trade-off between immediate and long-term rewards

Full observation & partial observation

RL Agent:

Components:

1. Policy: the agent’s behavior function

Maps state/observation to action

Stochastic policy (probabilistic sampling): $\pi(a \mid s) = P[A_t = a \mid S_t = s]$

Deterministic policy: $a^* = \arg\max_a \pi(a \mid s)$
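A small sketch contrasting the two kinds of policy, assuming a hypothetical tabular policy `pi` stored as a dict of action probabilities per state. The stochastic policy samples $a \sim \pi(\cdot \mid s)$; the deterministic one takes the argmax.

```python
import random

# Hypothetical tabular policy: pi[state][action] = pi(a|s)
pi = {
    "s0": {"left": 0.2, "right": 0.8},
    "s1": {"left": 0.6, "right": 0.4},
}


def sample_action(pi, s, rng=random):
    """Stochastic policy: draw a ~ pi(.|s)."""
    actions = list(pi[s])
    weights = [pi[s][a] for a in actions]
    return rng.choices(actions, weights=weights, k=1)[0]


def greedy_action(pi, s):
    """Deterministic policy: a* = argmax_a pi(a|s)."""
    return max(pi[s], key=pi[s].get)


print(greedy_action(pi, "s0"))  # right
print(greedy_action(pi, "s1"))  # left

rng = random.Random(0)  # seeded for reproducibility
counts = {"left": 0, "right": 0}
for _ in range(10_000):
    counts[sample_action(pi, "s0", rng)] += 1
print(counts)  # roughly 2000 left / 8000 right
```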

2. Value function:

Expected discounted sum of future rewards under a particular policy $\pi$

The discount factor $\gamma$ weights immediate vs. future rewards

Used to quantify the goodness/badness of states and actions

$$v_\pi(s) \overset{\triangle}{=} E_\pi[G_t \mid S_t = s] = E_\pi\left[\sum_{k=0}^{\infty} \gamma^k R_{t+k+1} \mid S_t = s\right]$$
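A worked sketch of the return $G_t = \sum_{k=0}^{\infty} \gamma^k R_{t+k+1}$ for a finite episode (rewards after the episode ends are taken as zero). The hypothetical helper `discounted_return` accumulates backwards using $G_t = R_{t+1} + \gamma G_{t+1}$, and the different discount factors show how $\gamma$ trades off immediate against future rewards.

```python
def discounted_return(rewards, gamma):
    """G_t = sum_k gamma^k * R_{t+k+1}, with rewards = [R_{t+1}, R_{t+2}, ...]."""
    g = 0.0
    # Accumulate from the end: G_t = R_{t+1} + gamma * G_{t+1}
    for r in reversed(rewards):
        g = r + gamma * g
    return g


rewards = [1.0, 0.0, 0.0, 10.0]
print(discounted_return(rewards, 0.9))  # about 8.29 = 1 + 0.9**3 * 10
print(discounted_return(rewards, 0.0))  # 1.0: only the immediate reward counts
print(discounted_return(rewards, 1.0))  # 11.0: all rewards weighted equally
```

Averaging such returns over many episodes that start in state $s$ and follow $\pi$ gives a Monte Carlo estimate of $v_\pi(s)$.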

Q-function (used to select among actions):

$$q_\pi(s,a) \overset{\triangle}{=} E_\pi[G_t \mid S_t = s, A_t = a] = E_\pi\left[\sum_{k=0}^{\infty} \gamma^k R_{t+k+1} \mid S_t = s, A_t = a\right]$$
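A sketch of how a Q-function is used to select actions, assuming a hypothetical Q-table `q` (a dict of dicts) holding estimates of $q_\pi(s,a)$. The greedy choice is $\arg\max_a q(s,a)$; the helper `state_value` also illustrates the standard relation $v_\pi(s) = \sum_a \pi(a \mid s)\, q_\pi(s,a)$.

```python
# Hypothetical Q-table: q[state][action] = estimated q_pi(s, a)
q = {
    "s0": {"left": 0.5, "right": 1.2},
    "s1": {"left": 2.0, "right": -1.0},
}


def select_action(q, s):
    """Pick the action with the highest estimated q(s, a)."""
    return max(q[s], key=q[s].get)


def state_value(q, s, pi_s):
    """v_pi(s) = sum_a pi(a|s) * q_pi(s, a), where pi_s = {a: pi(a|s)}."""
    return sum(p * q[s][a] for a, p in pi_s.items())


print(select_action(q, "s0"))  # right
print(select_action(q, "s1"))  # left
print(state_value(q, "s0", {"left": 0.5, "right": 0.5}))  # 0.85
```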

3. Model

A model predicts what the environment will do next
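A model is commonly written as a state-transition model $P^a_{ss'} = P[S_{t+1} = s' \mid S_t = s, A_t = a]$ plus a reward model $R^a_s = E[R_{t+1} \mid S_t = s, A_t = a]$. The sketch below stores both as tables for a hypothetical two-state environment (`P`, `R`, and the state values `v` are made up for illustration) and uses them for a one-step lookahead, i.e. planning with the model instead of trying actions in the real environment.

```python
# Hypothetical tabular model of a tiny environment.
# P[(s, a)] = {s_next: probability}; R[(s, a)] = expected immediate reward
P = {
    ("s0", "go"): {"s0": 0.1, "s1": 0.9},
    ("s0", "stay"): {"s0": 1.0},
    ("s1", "go"): {"s1": 1.0},
    ("s1", "stay"): {"s1": 1.0},
}
R = {("s0", "go"): 0.0, ("s0", "stay"): 0.0, ("s1", "go"): 1.0, ("s1", "stay"): 1.0}


def predict(s, a):
    """Model prediction: distribution over next states and expected reward."""
    return P[(s, a)], R[(s, a)]


def one_step_lookahead(s, actions, v, gamma=0.9):
    """Score each action by R(s,a) + gamma * sum_s' P(s'|s,a) * v(s')."""
    scores = {}
    for a in actions:
        probs, r = predict(s, a)
        scores[a] = r + gamma * sum(p * v[s2] for s2, p in probs.items())
    return max(scores, key=scores.get), scores


v = {"s0": 0.0, "s1": 10.0}  # hypothetical state values
best, scores = one_step_lookahead("s0", ["go", "stay"], v)
print(best)  # go
```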

Types of RL Agents based on what the Agent Learns

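Value-based agent

Explicitly learns the value function; the policy is implicit (derived from the value function)

Policy-based agent

Explicitly learns the policy; no value function

Actor-critic agent

Combines a policy and a value function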

Types of RL Agents based on whether the Agent has a Model

Model-based

Directly learns a model (of the environment's transitions)

Model-free

Directly learns the value function and/or policy function

No model

Exploration and Exploitation

Exploration: trial and error (trying new actions)

Exploitation: choosing the best action given current knowledge
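ε-greedy is one common and very simple way to balance the two; it is not described in these notes, so treat this as an illustrative sketch with hypothetical action-value estimates `q_values`. With probability ε the agent explores with a uniformly random action; otherwise it exploits the action with the highest estimate.

```python
import random


def epsilon_greedy(q_values, epsilon, rng=random):
    """With probability epsilon explore (random action), otherwise exploit (argmax)."""
    if rng.random() < epsilon:
        return rng.randrange(len(q_values))  # exploration: trial and error
    return max(range(len(q_values)), key=q_values.__getitem__)  # exploitation


q_values = [0.2, 0.9, 0.5]  # hypothetical action-value estimates
rng = random.Random(42)  # seeded for reproducibility
picks = [epsilon_greedy(q_values, 0.1, rng) for _ in range(1000)]
print(picks.count(1) / len(picks))  # on average close to 0.9 + 0.1 / 3
```

A larger ε explores more at the cost of taking the currently best action less often.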

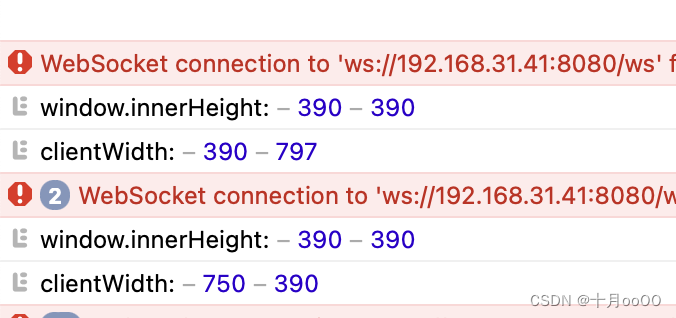

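```python
import gym

# This freezes as soon as it is run in Python...
# Written for the classic gym API (gym < 0.26); newer versions change reset/step/render.
env = gym.make('CartPole-v0')
env.reset()
for _ in range(200):
    env.render()  # draw the current frame
    action = env.action_space.sample()  # random action
    observation, reward, done, info = env.step(action)
    if done:
        env.reset()  # start a new episode when the pole falls
env.close()  # close the render window when finished
```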

Example

Next class: Markov decision processes (MDPs)